As viewers consume media across an increasing array of platforms and devices, the demand for real-time, high-quality video experiences continues to grow. Whether for broadcasts supported by IP networks, OTT video services, or IP-based video networks facilitating video distribution across venues, low latency is a vital enabler of engaging viewing experiences.

In applications such as live production, playout, and content delivery, latency influences both operational success and audience satisfaction. Live production itself requires precise timing and synchronization, and content delivery (whether a fast-paced sports broadcast, news update, live concert, or worship service, or live OTT content in general) demands near-instantaneous delivery of audio and video. Monitoring latency and keeping delay low are therefore vital to maintaining media workflows and delivering compelling viewer experiences.

The Basics of Latency

Latency refers to the delay introduced at various stages of signal processing in media workflows. In studio or media control room (MCR) environments, this delay is measured in milliseconds, reflecting the time it takes for an image to travel from the camera through switchers, encoders, and transport systems to appear on a multiviewer display or another operator monitor.

For operators working in live production, perceptible lag caused by latency can disrupt workflows or cause elements of a broadcast to appear out of sync. This is problematic especially for technical directors (TDs), replay editors, and other operators who rely on immediate feedback from control systems as they create a live show. Delay can lead to repeated commands or confusion that undermines both practical and creative aspects of production.

While latency in live production is measured in milliseconds, latency in OTT delivery often spans seconds — the delay between the instant content is captured or generated and the moment it’s displayed on the viewer’s screen. Latency in OTT delivery arises from the need to buffer for adaptive streaming, multi-device delivery, and content distribution over large networks.

For audiences, higher latencies can compromise the viewing experience, be it in a sports or entertainment venue or on a TV screen, computer, or mobile device. To prevent disorienting delay for fans in a sports arena, for example, end-to-end latency between the action on the field and the image on in-venue displays, such as a Jumbotron, must be minimal. All audio and visual elements must be immediate and in sync.

For OTT delivery to viewers at home or on the go, highly variable latency causes inconsistent delivery speeds and viewing experiences across different services, platforms, and devices. (And no one wants to receive spoilers through smartphone notifications or hear their neighbors celebrating a goal before they see it themselves!) Addressing this latency involves distinct considerations such as managing CDN performance, reducing segment durations, and optimizing encoding for delivery platforms.

Addressing Latency From End to End

Latency doesn’t originate from a single source; rather, it accumulates across the entire media pipeline.

Providing engineers with comprehensive visibility across the entire production chain, the TAG Realtime Monitoring Platform excels at monitoring latency as it accumulates across workflows. Tools within the TAG platform ensure robust monitoring across the live production and content delivery environments, enabling engineers to track delays and ensure consistent end-to-end performance.

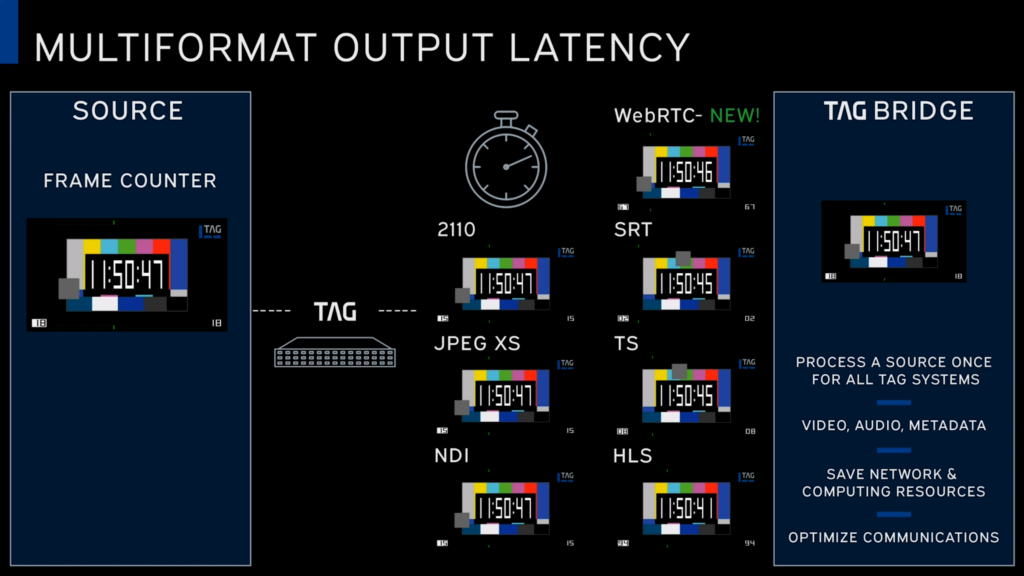

In live production, TAG supports low-latency visualizations (e.g., mosaics) over protocols such as ST 2110 and WebRTC, providing engineers with clear, real-time insights into their workflows. While TAG is not focused on directly reducing latency, the TAG platform does ensure visibility and operational efficiency in environments in which rapid issue identification is critically important. For playout and delivery, where optimizing latency has a direct impact on the viewer experience, TAG provides tools that support measurement, monitoring, and optimization of latency across the delivery chain.

For specific delay identification and analysis, tools such as TAG’s VALID (Video and Audio Latency Identification and Display) play a role in measuring and verifying workflow performance. VALID embeds unique identifiers or markers into video and audio streams at specific points in the workflow. As these streams traverse the IP network, VALID calculates the latency introduced at each segment. This real-time analysis enables engineers to pinpoint delays, assess synchronization between audio and video, and ensure that the end-to-end workflow adheres to low-latency requirements.
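The marker-based approach described above can be illustrated with a minimal sketch. This is not VALID's implementation or API; the `Observation` record, the probe names, and the timestamps are all hypothetical, and the sketch assumes every probe point reads the same reference clock (for example, one disciplined by PTP). It shows only the arithmetic: the delay for each workflow segment is the difference between the times at which the same marker is seen at two consecutive probe points, and audio-video offset is the difference between the audio and video arrival times at one probe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One sighting of an embedded marker (hypothetical record layout)."""
    marker_id: int     # identifier embedded in the stream
    probe: str         # probe point where the marker was detected
    seen_at_ms: float  # detection time on a shared reference clock, in ms


def segment_latencies(observations, probe_order, marker_id):
    """Return {(probe_a, probe_b): delay_ms} for consecutive probe points."""
    seen = {o.probe: o.seen_at_ms for o in observations if o.marker_id == marker_id}
    result = {}
    for a, b in zip(probe_order, probe_order[1:]):
        if a in seen and b in seen:  # skip segments with a missing sighting
            result[(a, b)] = seen[b] - seen[a]
    return result


def av_offset_ms(video_obs, audio_obs, probe, marker_id):
    """Audio arrival minus video arrival at one probe; positive means audio is late."""
    def arrival(obs):
        return next(o.seen_at_ms for o in obs
                    if o.marker_id == marker_id and o.probe == probe)
    return arrival(audio_obs) - arrival(video_obs)


# Illustrative values only; these are not measurements.
PROBES = ["camera", "switcher", "encoder", "multiviewer"]
video = [
    Observation(42, "camera", 0.0),
    Observation(42, "switcher", 17.0),
    Observation(42, "encoder", 50.0),
    Observation(42, "multiviewer", 58.0),
]
audio = [Observation(42, "multiviewer", 70.0)]

per_segment = segment_latencies(video, PROBES, marker_id=42)
end_to_end = sum(per_segment.values())
offset = av_offset_ms(video, audio, "multiviewer", marker_id=42)

for (a, b), delay in per_segment.items():
    print(f"{a} -> {b}: {delay:.1f} ms")
print(f"end to end: {end_to_end:.1f} ms, audio offset: {offset:+.1f} ms")
```

Summing the per-segment values gives the end-to-end figure, which is why a breakdown of this kind helps locate the stage that contributes the most delay.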

TAG tools help engineers to recognize and minimize latency during distribution. However, once the content reaches the playback platform or device (such as a cable or satellite set-top box, an OTT application on a device like Roku or Apple TV, or a smart TV), latency is influenced by the specific hardware, software, and network conditions of that playback environment. At this stage, delays are often measured in seconds or, in some cases, even longer, depending on buffering requirements and other factors.

Managing Latency in Complex Environments

As content moves from production through the delivery chain, encoding and compression are significant factors. The choice between uncompressed transport such as SMPTE ST 2110 and compressed streams carried over protocols such as SRT or WebRTC directly influences latency. Uncompressed formats deliver ultra-low latency but come with high infrastructure costs, whereas compressed workflows are more cost-effective but introduce higher delay.

Network and distribution variables also contribute to latency. Factors such as buffer sizes, protocol overheads (e.g., HLS vs. SRT), and CDN configurations affect delivery speed. While they are robust, protocols like HLS can create significant delays due to their segment-based structure. Low-latency streaming alternatives can reduce this delay but require careful tuning of cache and buffer settings. To help address these factors and optimize OTT delivery, TAG monitoring tools enable engineers to track latency throughout the workflow and to verify audio-video sync and consistent playback across platforms.
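To see why segment-based delivery adds seconds of delay, and why shorter segments help, consider a rough back-of-the-envelope model. It is a deliberate simplification rather than a description of any particular player or CDN: it assumes a segment must be fully produced before it can be published, and that the player holds a fixed number of segments in its buffer before starting playback. The function name, its parameters, and the numbers used are illustrative assumptions.

```python
def estimate_segmented_latency_s(segment_s, buffered_segments,
                                 encode_s=1.0, cdn_s=1.0):
    """Rough glass-to-glass estimate for segment-based streaming, in seconds.

    Simplified model (an assumption, not a measurement):
      encode delay
      + one segment duration (a segment must be complete before it is published)
      + CDN propagation delay
      + segments the player buffers before starting playback
    """
    packaging_wait = segment_s
    player_buffer = buffered_segments * segment_s
    return encode_s + packaging_wait + cdn_s + player_buffer


# Illustrative comparison: long vs. short segments, three segments buffered.
long_segments = estimate_segmented_latency_s(segment_s=6.0, buffered_segments=3)
short_segments = estimate_segmented_latency_s(segment_s=2.0, buffered_segments=3)

print(f"6 s segments: about {long_segments:.0f} s behind live")
print(f"2 s segments: about {short_segments:.0f} s behind live")
```

In this model the segment duration is multiplied by the buffer depth, so it dominates the total. Shortening segments or buffering fewer of them lowers delay, but leaves the player less margin to absorb network variation, which is the tuning trade-off noted above.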

The complexity of modern workflows amplifies the potential for added latency, particularly because productions today often mix and match formats including ST 2110, JPEG XS, SRT, and NDI. The TAG platform excels in such environments, delivering both flexibility and valuable mixed-format monitoring capabilities.

Unlike traditional monitoring systems optimized for uncompressed formats, the TAG Realtime Monitoring Platform supports a range of protocols, including uncompressed formats like ST 2110 and compressed ones such as SRT, WebRTC, and NDI. This versatility allows TAG users to monitor feeds in their native format before conversion while also handling post-conversion outputs. For example, in a hybrid workflow that combines ST 2110 and SRT, operators can inspect the quality of compressed streams alongside uncompressed ones. This capability helps operators to identify issues early and to maintain operational efficiency.

Balancing Latency and Cost: A Pragmatic Approach

Latency remains a defining factor in the success of modern media workflows. While ultra-low latency may be non-negotiable in top-tier live production, most workflows benefit from a balanced approach that considers both cost and operational flexibility.

Achieving the lowest possible latency comes at a cost, and often a steep one. Workflows using SMPTE ST 2110 and JPEG XS deliver exceptionally low latency but require significant investment in dedicated hardware — costly switches and expensive encoding/decoding channels. This high-end infrastructure is often reserved for major networks or top-tier events.

For mid-tier productions or smaller markets, the balance shifts. While a compressed workflow based on SRT, NDI, or WebRTC may increase latency, it also can reduce costs dramatically, from millions of dollars to hundreds of thousands. For productions or media organizations that do not require ultra-low latency, these trade-offs make compressed workflows a more viable option — and an attractive business solution.

Uniquely versatile in its ability to decode, monitor, and probe across formats and protocols, TAG gives operators and engineers the ability to navigate these trade-offs. Offering unparalleled visibility, the TAG platform is essential for organizations seeking to monitor and optimize their end-to-end IP workflows. Whether for live broadcasts, playout, or OTT delivery, TAG provides — in a single solution — the tools critical to understanding and managing latency in a way that elevates operations and audience impact.